- Initial context

- Objective decomposition

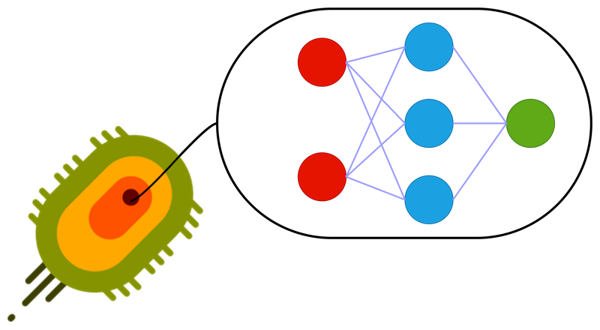

- Bacteria brain

- Adaptation of genetic algorithm to bacteria’s brains

- What’s next?

As a student I was fascinated by enthusiasts' experiments with neural networks and simulations of various kinds. It felt like magic. Of course, as an IT and math guy I understood that the underlying ideas and mechanisms are fairly simple. But when you watch a system evolve and start to “look smart”, or see the funny, unpredictable, yet effective behavior that can emerge during synthetic evolution, you rejoice like a child.

Initial context

When I decided to join the world of AI simulations, I found nothing more obvious than to make one more “life” simulation („• ᴗ •„). The context is simple: there are creatures (bacteria) that need to find food and eat it. Visually, there is an area where a group of bacteria and a limited amount of food are spawned at random. For simplicity, the bacteria are immortal and their count is fixed. As for food, when a piece is eaten, a new piece is placed at a random position within the simulation area. To summarize: the main goal of the simulation is to “teach” bacteria to locate food and reach it faster than other bacteria. Strictly speaking, “teach” is not the right term here, since the bacteria should learn by themselves; I only need to tell them what to learn, what is good and what is not. First, it is necessary to understand which math tools can help reach this objective. That is the topic of this post.

Objective decomposition

Solving this abstract problem requires two main pieces:

- to be able to learn, a bacterium needs a “brain”

- to get better, a bacterium must be placed in an environment with a tournament; without one there is no stimulus to evolve

* As a newcomer to this area, I decided to pick the simplest solutions

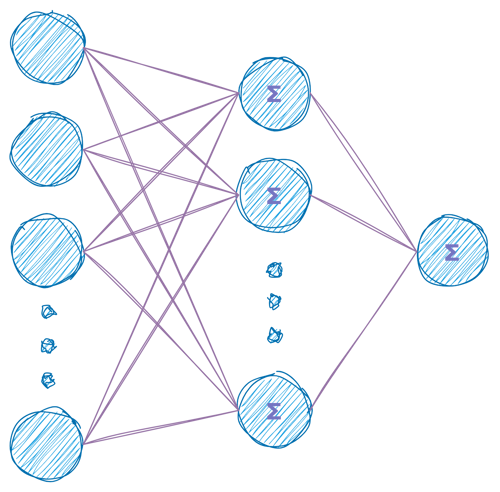

Neural networks

A simple model of a bacterium’s brain is a feed-forward three-layer neural network, where:

- The input layer receives input excitations and passes them on to the next layer

- The hidden layer has neurons with an activation function. Each such neuron collects the signals arriving over its input synapses, scales each one by the synapse weight, and produces its output by applying a non-linear transformation to the weighted sum

- The output layer also has neurons with activation functions; they collect signals from the hidden layer and generate the final network output (there can be more than one output signal)
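The three layers above can be sketched as a short forward pass. This is a minimal sketch, not the simulation's actual code: the function name `forward`, the list-of-lists weight layout, the layer sizes, and the use of the standard logistic function for every neuron are all assumptions made for illustration.

```python
import math
import random

def forward(inputs, w_hidden, w_output, activation):
    """Propagate inputs through one hidden layer and one output layer."""
    # Each hidden neuron sums its weighted inputs, then applies the activation.
    hidden = [activation(sum(w * x for w, x in zip(row, inputs))) for row in w_hidden]
    # Output neurons do the same with the hidden-layer signals.
    return [activation(sum(w * h for w, h in zip(row, hidden))) for row in w_output]

def logistic(x):
    """Standard logistic function, used here as a stand-in activation."""
    return 1.0 / (1.0 + math.exp(-x))

# Arbitrary sizes for the example: 3 inputs, 4 hidden neurons, 2 outputs.
rng = random.Random(0)
n_in, n_hidden, n_out = 3, 4, 2
w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
w_output = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]

outputs = forward([1.0, 0.5, 0.8], w_hidden, w_output, logistic)
```

Each row of a weight matrix holds the input synapses of one neuron, so the network shape is fully determined by the matrix dimensions.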

In the current context, 3 types of activation functions will be used:

- Linear function: \(f(x) = ax + b\)

- Sigmoid function: \(f(x) = \frac{a}{b + e^{-cx} + 1}\)

- Signum (threshold) function: \(f(x) = \begin{cases} 1, & x > a\\ 0, & x \leq a \end{cases}\)
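The three activation functions can be written as plain Python functions. This is a rough sketch: the sigmoid is read here as \(a / (b + e^{-cx} + 1)\), which is my interpretation of the formula above, and the default parameter values are assumptions chosen so that the sigmoid reduces to the standard logistic function.

```python
import math

def linear(x, a=1.0, b=0.0):
    """f(x) = a*x + b"""
    return a * x + b

def sigmoid(x, a=1.0, b=0.0, c=1.0):
    """f(x) = a / (b + e^(-c*x) + 1); the defaults give the standard logistic."""
    # Note: math.exp overflows for very large positive -c*x; inputs are
    # assumed to stay in a moderate range in this sketch.
    return a / (b + math.exp(-c * x) + 1.0)

def signum(x, a=0.0):
    """Threshold at a: 1 above it, 0 at or below it."""
    return 1.0 if x > a else 0.0
```

The parameters `a`, `b` and `c` are what the genetic algorithm can later tune per neuron, alongside the synapse weights.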

Genetic algorithm

The tournament mechanism can be implemented with a genetic algorithm. In simple terms, a genetic algorithm can be described as follows:

- There is a population of individuals (chromosomes)

- Each chromosome has a genome: a sequence of data (genes) that fully describes its uniqueness and behavior

- A chromosome can mutate. Mutation is a random change of genes in the genome. A mutation can be “good” (improve characteristics) or “bad” (degrade them)

- Chromosomes can cross over. Crossover is a random exchange of genome parts between two chromosomes

- There is an environment with a so-called fitness function. The fitness function expresses the success criteria for chromosomes in the population and in effect guides the direction of evolution. It is calculated for each chromosome and tells whether that chromosome is good in the context of the given environment and its goals, and how good it is

- The environment has evolution cycles. During each cycle, chromosomes try to reach the evolution goals

- After each evolution cycle the population is sorted by fitness value; the best chromosomes stay in the population for the next cycle, while the other (not so successful) chromosomes die

- Survivors mutate and cross over, forming a new population
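One evolution cycle from the list above might look like the sketch below. Several things here are assumptions made for illustration: the function name `evolve`, genomes as flat lists of floats, keeping survivors unchanged while children fill the freed slots, single-point crossover, Gaussian mutation of one gene, and the toy fitness function.

```python
import random

def evolve(population, fitness, rng, survivors=0.5, mutation_scale=0.1):
    """Run one evolution cycle and return the next population."""
    # Sort by fitness, best first, and keep the top fraction.
    ranked = sorted(population, key=fitness, reverse=True)
    keep = max(2, int(len(ranked) * survivors))
    best = ranked[:keep]
    children = []
    while len(best) + len(children) < len(population):
        # Crossover: the child takes a prefix from one parent, the rest from the other.
        mother, father = rng.sample(best, 2)
        cut = rng.randrange(1, len(mother))
        child = mother[:cut] + father[cut:]
        # Mutation: nudge one randomly chosen gene.
        i = rng.randrange(len(child))
        child[i] += rng.gauss(0.0, mutation_scale)
        children.append(child)
    return best + children

def toy_fitness(genome):
    """Toy goal for the example: genes close to zero are 'fitter'."""
    return -sum(g * g for g in genome)

rng = random.Random(1)
population = [[rng.uniform(-1, 1) for _ in range(5)] for _ in range(20)]
start_best = max(toy_fitness(g) for g in population)
for _ in range(30):
    population = evolve(population, toy_fitness, rng)
final_best = max(toy_fitness(g) for g in population)
```

Because the survivors are copied over unchanged in this variant, the best fitness in the population never gets worse from one cycle to the next.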

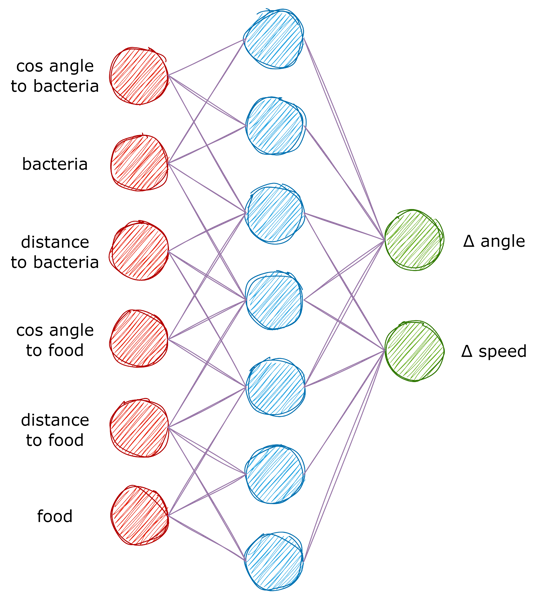

Bacteria brain

Now let’s apply the abstract neural network tooling to design a brain for a bacterium. To do this, it is necessary to understand which input excitations can affect the bacterium and which neural outputs it needs to reach its goal. For movement in a 2D plane only speed and movement angle are needed, so there will be 2 neurons in the output layer. Now for the inputs: a bacterium should receive the following signals:

- Food availability in neighborhood area

- Distance to the food

- Cosine of angle between bacteria movement vector and vector pointing to the food

- Presence of other bacteria (competitors) in neighborhood area

- Distance to the other bacteria

- Cosine of angle between bacteria movement vector and vector pointing to the other bacteria

* A bacterium can see only what is in front of it
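The three signals per target (presence, distance, cosine) can be computed with basic vector math. This is a sketch under assumptions of my own: the function name `sense`, a heading stored as an angle in radians, a circular `view_radius`, and “in front” meaning a positive cosine.

```python
import math

def sense(position, heading, target, view_radius):
    """Return (visible, distance, cosine) for one target, or zeros if unseen."""
    dx, dy = target[0] - position[0], target[1] - position[1]
    distance = math.hypot(dx, dy)
    # A coincident target has no direction; a distant one is out of sight.
    if distance == 0.0 or distance > view_radius:
        return (0.0, 0.0, 0.0)
    # Unit movement vector from the heading angle.
    hx, hy = math.cos(heading), math.sin(heading)
    # Cosine of the angle between the movement vector and the vector to the target.
    cosine = (dx * hx + dy * hy) / distance
    # Only targets in the front half-plane are visible.
    if cosine <= 0.0:
        return (0.0, 0.0, 0.0)
    return (1.0, distance, cosine)

# A bacterium at the origin moving along +x: food ahead, a competitor behind.
food = sense((0.0, 0.0), 0.0, (3.0, 0.0), view_radius=10.0)
rival = sense((0.0, 0.0), 0.0, (-2.0, 1.0), view_radius=10.0)
inputs = list(food + rival)  # the 6 values fed to the input layer
```

Here the food is seen at distance 3 straight ahead, while the competitor behind the bacterium contributes three zeros.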

The final bacteria brain looks like this:

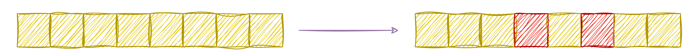

Adaptation of genetic algorithm to bacteria’s brains

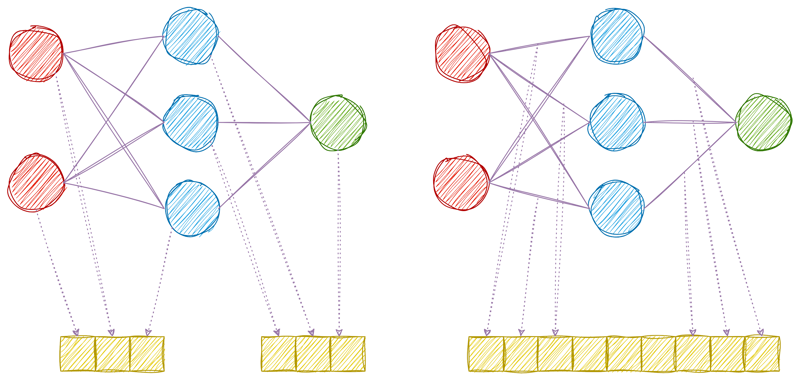

Now the designed brain needs to become a chromosome in the genetic algorithm.

As I mentioned before, a population consists of a bunch of chromosomes which can mutate and cross over. So a bacterium’s brain has to be transformed somehow into a linear genome chain. In a neural network, two types of entities can be part of the genome: neurons and synapses, and both need to be linearized. Example of linearization below:

After mutation and crossover, the neural network is reconstructed from the linear genome.
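One possible way to linearize a network and rebuild it is sketched here. The gene layout is an assumption for illustration, not necessarily the one used in the simulation: each neuron becomes a `("neuron", activation, params)` gene followed by one `("weight", value)` gene per input synapse.

```python
def linearize(layers):
    """Flatten layers of neurons into one list of genes."""
    genome = []
    for layer in layers:
        for neuron in layer:
            # One gene for the neuron itself...
            genome.append(("neuron", neuron["activation"], tuple(neuron["params"])))
            # ...followed by one gene per incoming synapse weight.
            genome.extend(("weight", w) for w in neuron["weights"])
    return genome

def rebuild(genome, layer_sizes):
    """Reconstruct layers from a genome; layer_sizes = [inputs, hidden, outputs]."""
    genes = iter(genome)
    layers = []
    # Each layer's neurons have as many weights as the previous layer has units.
    for fan_in, size in zip(layer_sizes, layer_sizes[1:]):
        layer = []
        for _ in range(size):
            _, activation, params = next(genes)
            weights = [next(genes)[1] for _ in range(fan_in)]
            layer.append({"activation": activation, "params": list(params), "weights": weights})
        layers.append(layer)
    return layers

# Tiny network: 2 inputs, 2 hidden neurons, 1 output neuron.
network = [
    [{"activation": "sigmoid", "params": [1.0, 0.0, 1.0], "weights": [0.2, -0.5]},
     {"activation": "linear", "params": [1.0, 0.0], "weights": [0.7, 0.1]}],
    [{"activation": "signum", "params": [0.0], "weights": [0.3, -0.9]}],
]
genome = linearize(network)
restored = rebuild(genome, [2, 2, 1])
```

As long as the topology stays fixed, the layer sizes are enough to turn the flat genome back into the same network.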

Mutation

As mentioned above, a neural network has two kinds of entities: synapses and neurons, and both can mutate. Synapses (more specifically, their weights) can mutate in several ways; I chose two approaches for the simulation:

- mutate weights by changing their values slightly

- shuffle existing weights within some range

Neurons can also mutate in different ways, from which I again chose two:

- mutate activation function parameters by changing their values slightly

- mutate the activation function itself (i.e. replace one activation function with another)

When the time for mutation comes, one of the approaches above is chosen at random.

* Actually, there is one more way to mutate: changing the neural network topology, but I decided to skip this approach for now due to its complexity.
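The four mutation approaches can be sketched as small in-place functions plus a random dispatcher. The details are assumptions: Gaussian noise for “slight” changes, “shuffle within some range” read as permuting one random contiguous interval of weights, and a hypothetical `DEFAULTS` table that resets parameters when the activation function changes.

```python
import random

# Hypothetical default parameters per activation: (a, b), (a, b, c), (a,).
DEFAULTS = {"linear": [1.0, 0.0], "sigmoid": [1.0, 0.0, 1.0], "signum": [0.0]}

def nudge_weights(weights, rng, scale):
    """Change every weight by a small Gaussian amount."""
    for i in range(len(weights)):
        weights[i] += rng.gauss(0.0, scale)

def shuffle_weights(weights, rng):
    """Permute the weights inside one random contiguous interval."""
    start = rng.randrange(len(weights))
    end = rng.randrange(start, len(weights)) + 1
    segment = weights[start:end]
    rng.shuffle(segment)
    weights[start:end] = segment

def nudge_params(neurons, rng, scale):
    """Slightly change the activation parameters of one random neuron."""
    neuron = rng.choice(neurons)
    neuron["params"] = [p + rng.gauss(0.0, scale) for p in neuron["params"]]

def swap_activation(neurons, rng):
    """Give one random neuron a different activation with default parameters."""
    neuron = rng.choice(neurons)
    others = [name for name in DEFAULTS if name != neuron["activation"]]
    neuron["activation"] = rng.choice(others)
    neuron["params"] = list(DEFAULTS[neuron["activation"]])

def mutate(weights, neurons, rng, scale=0.05):
    """Apply one of the four mutations, chosen at random, in place."""
    choice = rng.randrange(4)
    if choice == 0:
        nudge_weights(weights, rng, scale)
    elif choice == 1:
        shuffle_weights(weights, rng)
    elif choice == 2:
        nudge_params(neurons, rng, scale)
    else:
        swap_activation(neurons, rng)

rng = random.Random(42)
weights = [0.1, -0.4, 0.7, 0.2]
neurons = [{"activation": "sigmoid", "params": [1.0, 0.0, 1.0]}]
for _ in range(10):
    mutate(weights, neurons, rng)
```

Resetting the parameters on an activation swap keeps the parameter count consistent with the new function, since the three functions take different numbers of parameters.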

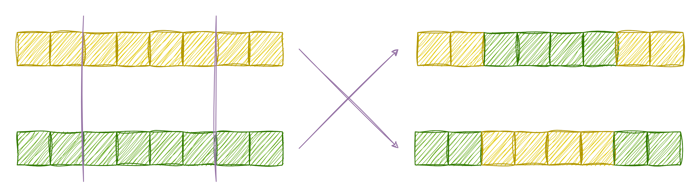

Crossover

The same goes for crossover: here, for both neurons and weights, I chose two techniques:

- exchange randomly chosen neurons or weights

- exchange neurons or weights from a random interval
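Both crossover techniques can be sketched on genomes stored as equal-length lists of genes. The function names, the 50% swap rate, and the choice to return two new children instead of modifying the parents are assumptions made for this example.

```python
import random

def crossover_random(a, b, rng, rate=0.5):
    """Swap individually chosen genes between two genomes of equal length."""
    a, b = list(a), list(b)
    for i in range(len(a)):
        if rng.random() < rate:
            a[i], b[i] = b[i], a[i]
    return a, b

def crossover_interval(a, b, rng):
    """Swap all genes inside one random contiguous interval."""
    a, b = list(a), list(b)
    start = rng.randrange(len(a))
    end = rng.randrange(start, len(a)) + 1
    a[start:end], b[start:end] = b[start:end], a[start:end]
    return a, b

rng = random.Random(7)
parent_a = [0, 0, 0, 0, 0, 0]
parent_b = [1, 1, 1, 1, 1, 1]
child_1, child_2 = crossover_random(parent_a, parent_b, rng)
child_3, child_4 = crossover_interval(parent_a, parent_b, rng)
```

With all-zero and all-one parents it is easy to see which genes were exchanged: `child_3` holds a single run of ones where the interval was swapped.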

What’s next?

That’s basically it for the math tooling behind the current simulation. The next posts will cover other aspects of the simulation.